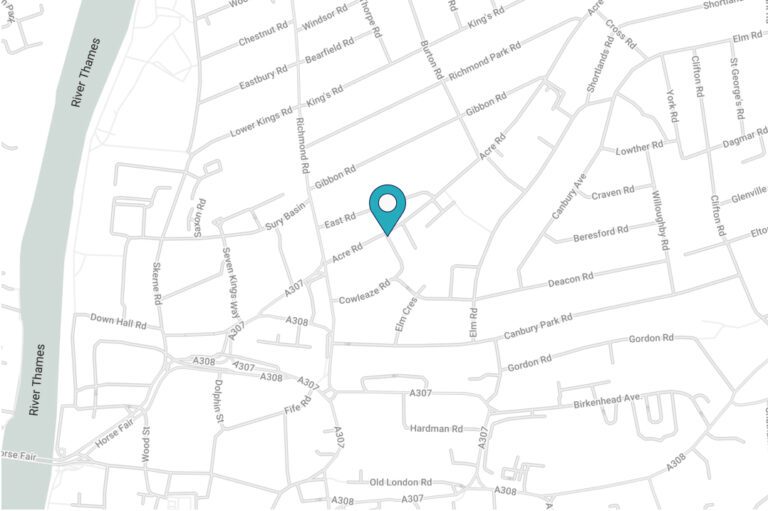

This week a driver in Hampton made the national press for eating a bowl of cereal whilst at the wheel – just a day before Jaguar Land Rover’s announcement of its new remote-controlled vehicle and hot on the heels of news that BMW will put driverless cars on the roads in China by the end of the year.

Does behaviour like this mean we’re really too busy to just…drive? Could the ‘most important meal of the day’ slot in at some other time, ideally when one is not at the helm of a car? Evidently not; it is time for someone, or something else, to take the wheel.

The idea of controlling a vehicle autonomously, removing the human liable to error and/or cravings for a bowl of Country Crisp, is nothing new. The concept was mooted almost a century ago, with trials taking place in the 1950s and 1980s. In May last year Google unveiled its prototype self-driving car. The bubble-like Googlemobile, which lacked a steering wheel and pedals, hinted at things to come.

Indeed, there are huge advantages: increased car-sharing, a reduced need for car parks, access to a car for those unable to drive, the ability to eat cereal whilst travelling in a motor vehicle, and many more. Executing the idea properly will bring levels of freedom only recently conceivable with the advent of Uber, albeit without the awkward driver chat.

However, question marks remain over the feasibility of building suitable infrastructure, not to mention the Toytown looks of Google’s concept and whether people actually want to give up driving which, for some, is one of life’s greatest freedoms.

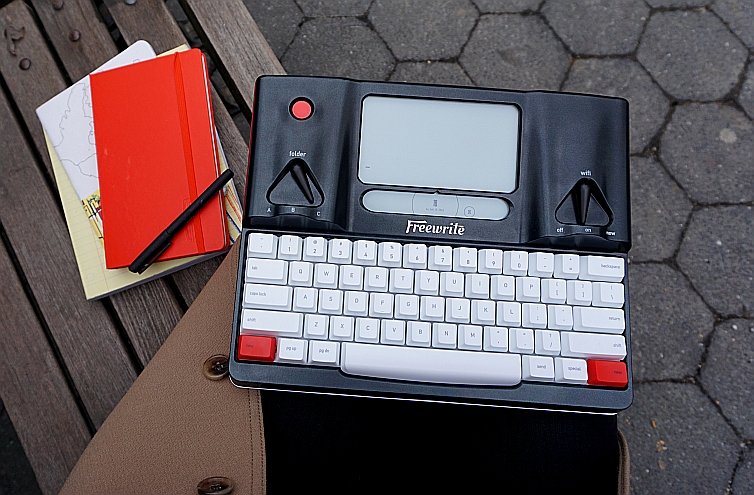

JLR’s smartphone app has been designed to carry out manoeuvres at very low speeds, taking the tedious parts out of driving: the trials and tribulations of a tight car park, for example, or, in the case of Land Rover, negotiating tricky off-road terrain. The technology is supremely cool, though tapping a smartphone screen means it still straddles the boundary between full autonomy and full control.

Extensive tests have shown that, whilst vehicles will be fully autonomous one day, we’re not there yet. Volvo’s self-parking car recently ploughed into a journalist because it had not been fitted with pedestrian detection functionality, which was another (fairly essential) cost option, while the twelfth accident involving a Google driverless car was also announced last week. Human drivers may be prone to human error, but so is human engineering.

Recent surveys suggest that at least half of individuals are against relinquishing control of their vehicles; this uncertainty is something we touched upon when Google launched its first 100 test vehicles. The erratic tendencies of the technology and its vulnerability to hacking could well delay the introduction of fully autonomous vehicles for some time.

That’s not to say elements of autonomy can’t have real benefits. Tech that steps in at moments of human error, in the form of standardised features such as autonomous braking and steering wheel sensors for those prone to snacking dangerously, will make our roads safer. However, before we let go of the wheel altogether, our understanding of technology and our future requirements needs to be optimal…